Collection of the metrics that the queue manager publishes requires that each monitored queue has at least one associated subscription. This post describes an interesting option where collection programs use durable subscriptions to minimise object handle use when running MQ monitoring. It reduces the requirements for configuring the MAXHANDS attribute on the queue manager. It’s also a nice demonstration of how subscriptions could be used in any application.

Metric Subscriptions

I’ve written before about collecting metrics for storage in a database like Prometheus and visualising them in Grafana. The way that the queue manager produces these metrics means that the collection program has to subscribe to separate topics for each queue. The subscription format does not accept wildcards. The “obvious” way of managing these subscriptions is to make a non-durable subscription, so that publication of the events automatically halts when the collector program ends. And that is how I coded the collection routines.

The programs also normally use a model queue definition to set up the destination queue for the publications. They don’t ask for a managed subscription (where the queue manager creates a separate destination queue for each subscription), but use the model queue to create a single dynamic queue. The MQSUB call then refers to that dynamic queue. Again, this helps with cleanup after the collector ends – not only are the subscriptions deleted, but so are the associated dynamic queues.

With this approach, every MQSUB uses an object handle to describe the subscription. This is not inherently a problem – a queue manager can deal with many thousands of object handles in a single program. But it can require a change to the default configuration.

Maximum Handles on a Queue Manager

The intention of the MAXHANDS attribute on a queue manager is to catch certain kinds of badly-behaved applications, such as those that loop continually opening queues and never closing them.

This is something I saw recently in an application that had been converted from using a C++ interface to using the C MQI directly. The C++ layer had destructors that automatically closed a queue when the object fell out of scope; in C, you have to remember when to do that yourself.

The attribute controls how many open handles each connection can use. The default value of 256 is sufficient for the vast majority of applications. But with the monitoring programs, that default is likely far too small. In fact, I added some code to the programs to detect the current configuration and suggest a better value if it appears too small.

Unfortunately there is no application-specific override possible; the setting applies to all applications on the queue manager. Changing the number does not affect things like performance, but it does change how quickly the queue manager might detect a rogue application. There is also no overall maximum handles value applied across the queue manager as a total for all applications. The attribute is per connection.
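For reference, checking and changing the attribute takes one MQSC command each. The sketch below only builds the command text, so it can be reviewed before being piped into runmqsc. The helper names `show_maxhands_cmd` and `alter_maxhands_cmd` are invented for this illustration.

```shell
# Hypothetical helpers: they print MQSC text and never contact a queue manager.

# Print the MQSC command that shows the current per-connection handle limit.
show_maxhands_cmd() {
  echo "DISPLAY QMGR MAXHANDS"
}

# Print the MQSC command that sets a new limit; reject anything non-numeric.
alter_maxhands_cmd() {
  case "$1" in
    ''|*[!0-9]*) echo "usage: alter_maxhands_cmd <number>" >&2; return 1 ;;
  esac
  echo "ALTER QMGR MAXHANDS($1)"
}
```

Typical use would be `show_maxhands_cmd | runmqsc QM1`, followed by something like `alter_maxhands_cmd 1000 | runmqsc QM1`. The value 1000 is an arbitrary example, not a recommendation.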

I started hearing from a number of users of the metric collectors who were trying to monitor many (possibly thousands of) queues, but who were reluctant to change the MAXHANDS value for their systems. Could we do anything to help?

Durable Subscriptions

A durable subscription persists after its creation, outside the lifetime of any application, so that messages continue to be delivered and can be retrieved later. Usually an administrator creates these subscriptions using an MQSC command, but programs can do it directly using the MQI. The normal reason for using these is so that publications are not lost, even when a retrieving application fails and must restart.

The trick

I can’t take credit for the core idea here – it actually came from David, though I had to work out how to fit it into the collector structure.

Essentially, we programmatically create a durable subscription and then immediately close the object handle. Although the handle is no longer active, messages continue to flow to the destination queue. It’s a similar effect to using an administered subscription, created using the DEFINE SUB MQSC command, but under application control. This means that the active handle count does not increase with the number of monitored queues, and that the default setting of MAXHANDS should be good enough.
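To make the comparison with an administered subscription concrete, here is a rough sketch that prints the DEFINE SUB commands an administrator might write for one queue. It is not what the collectors do (they use MQSUB and MQCLOSE through the MQI), and several details are assumptions: the subscription naming scheme, the choice of the PUT, GET and GENERAL metric classes, and the topic string layout `$SYS/MQ/INFO/QMGR/<qmgr>/Monitor/STATQ/<queue>/<class>`, which is my understanding of where the queue manager publishes per-queue metrics. The function name `define_metric_subs` is hypothetical.

```shell
# Print DEFINE SUB commands for one monitored queue.
# Nothing here contacts a queue manager; the output is plain MQSC text.
# Arguments: queue manager, subscription name prefix, destination queue, monitored queue.
# The naming scheme and the list of metric classes are assumptions for illustration.
define_metric_subs() {
  qmgr=$1; prefix=$2; dest=$3; queue=$4
  for class in PUT GET GENERAL; do
    # \$SYS keeps the literal topic root instead of expanding a shell variable.
    printf "DEFINE SUB('%s_%s_%s') TOPICSTR('\$SYS/MQ/INFO/QMGR/%s/Monitor/STATQ/%s/%s') DEST('%s')\n" \
      "$prefix" "$queue" "$class" "$qmgr" "$queue" "$class" "$dest"
  done
}
```

For example, `define_metric_subs QM1 COLL1 METRICS.REPLY APP.Q1` prints three commands, one per metric class, that could be reviewed and then piped into `runmqsc QM1`. Either way the subscription holds no application object handle once it exists.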

Updating the metric collectors

I tried a few experiments to check that the idea did actually work, and then converted the monitoring packages to exploit it. The new options appear from version 5.3.0 in the mq-metrics-samples repository.

I tend to use the Prometheus collector as the primary test environment, but the changes were in common code and therefore should work in all the other collectors in that repository.

Configuration for the metrics collectors

Some additional configuration options control the behaviour. You can supply these options to the collector programs in the usual varied ways – a YAML file, command line options or environment variables. The default configuration still uses the non-durable subscription approach; these new/modified options in the connection section change it:

durableSubPrefix: Setting this string switches to the new approach. The prefix is used to identify which durable subscriptions are associated with this collector. If you have two collectors running against the same queue manager, then the value must be different for each collector.

replyQueue: This is usually set to the name of a model queue. For durable subscriptions, it must name a real local queue.

replyQueue2: This must also be given and be a different real local queue.

The config.common.yaml file in the root of the repository shows how and where to set these options.
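Because both reply queues must already exist as real local queues in the durable configuration, they need defining before the collector starts. A minimal sketch of that step follows. The function name `define_reply_queues` and the queue names in the usage note are hypothetical, and the queues take default attributes, which may not suit a busy system.

```shell
# Print DEFINE QLOCAL commands for the two reply queues.
# Fails if either name is missing or if both names are the same,
# since replyQueue and replyQueue2 must refer to different queues.
define_reply_queues() {
  if [ -z "$1" ] || [ -z "$2" ] || [ "$1" = "$2" ]; then
    echo "need two different queue names" >&2
    return 1
  fi
  echo "DEFINE QLOCAL('$1')"
  echo "DEFINE QLOCAL('$2')"
}
```

Something like `define_reply_queues METRICS.REPLY.1 METRICS.REPLY.2 | runmqsc QM1` would create the pair. The same two names then go into replyQueue and replyQueue2.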

Showing the differences

I used these commands to extract statistics from the collector running in various configurations on my machine:

    # Total handles
    echo "DIS CONN(*) WHERE (APPLTAG eq 'mq_prometheus') type(handle) " |\
      runmqsc QM1 |\
      grep OBJTYPE | wc -l

    # Non-durable subscriptions
    echo "DIS SUB(*) WHERE (DURABLE eq no) topicstr" |\
      runmqsc QM1 |\
      grep -i TOPICSTR |\
      grep Monitor | wc -l

    # Durable subscription count
    echo "DIS SUB(*) WHERE (DURABLE eq yes) topicstr" |\
      runmqsc QM1 |\
      grep -i TOPICSTR |\
      grep Monitor | wc -l

    # Count of monitored queues
    echo "DIS SUB(*) TOPICSTR" |\
      runmqsc QM1 |\
      grep TOPICSTR |\
      grep GENERAL | wc -l

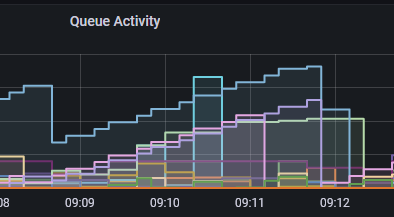

and it gave me these numbers for different queue monitoring patterns:

| Configuration | Handles | ND Sub | D Sub |
|---|---|---|---|
| Non-dur config (10 queues) | 47 | 43 | 0 |
| Non-dur config (65 queues) | 212 | 208 | 0 |
| Dur config (10 queues) | 17 | 13 | 30 |
| Dur config (63 queues) | 17 | 13 | 189 |

You can see how increasing the number of monitored queues does not affect the total number of in-use handles with the durable configuration, just the total number of subscriptions. This configuration still uses some non-durable subscriptions for reading the queue manager-wide metrics, but this is a small number and I didn’t bother converting those to durable subscriptions.

If you define new queues or delete existing ones that match the selected patterns, then the collectors notice this at the rediscoverInterval period. They then modify the subscription list to monitor the changed set.

The discrepancy between the 63 and 65 in the table is because the configured patterns picked up the dynamic queues used by the non-durable case; the durable option uses local queues whose names don’t match the patterns I was monitoring.
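The numbers in the table fit a simple pattern: 3 subscriptions per monitored queue, 13 queue manager-wide subscriptions, and 4 other handles. The sketch below turns that into arithmetic. The constants are read off the table for this one test setup, so they are assumptions rather than fixed properties of the collectors. The last function also assumes that all the handles belong to a single connection. The function names are invented for the example.

```shell
# Constants inferred from the table in this post (one machine, one configuration).
BASE_HANDLES=4      # handles that are not subscriptions
QMGR_SUBS=13        # non-durable subscriptions for queue manager-wide metrics
SUBS_PER_QUEUE=3    # subscriptions created for each monitored queue

# Handles in use with the non-durable configuration for N queues.
nondurable_handles() { echo $(( BASE_HANDLES + QMGR_SUBS + SUBS_PER_QUEUE * $1 )); }

# Handles in use with the durable configuration: independent of the queue count.
durable_handles() { echo $(( BASE_HANDLES + QMGR_SUBS )); }

# Durable subscriptions created for N queues.
durable_subs() { echo $(( SUBS_PER_QUEUE * $1 )); }

# Largest queue count that fits under a given MAXHANDS in the non-durable case.
max_queues_nondurable() { echo $(( ($1 - BASE_HANDLES - QMGR_SUBS) / SUBS_PER_QUEUE )); }

nondurable_handles 65      # prints 212
durable_handles            # prints 17
durable_subs 63            # prints 189
max_queues_nondurable 256  # prints 79
```

On these figures, the non-durable approach would reach the default MAXHANDS of 256 at around 80 monitored queues, while the durable approach stays at 17 handles however many queues match.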

Is there a downside?

The principal drawback to using durable subscriptions is the lack of automatic cleanup. If the collector program ends, then publications continue to be generated and delivered to the target queues. This can result in the queues filling up, and you might start to get alerts or error log entries because of it.

I have provided scripts/cleanDur.sh as a simple script to help cleanup if you do get into this situation and want to stop more publications. Simply give the queue manager name and the durableSubPrefix value.

Startup of the collector program is likely to be slower because it needs to reset the subscription list to match its configuration, but I don’t expect any other different performance characteristics.

Summary

Closing a resource that you are apparently using feels unintuitive, but this technique does show the separation between application and subscription given by the durability option.

This post was last updated on June 30th, 2022 at 07:23 am

Brilliant, I’m really looking forward to trying this out in our test environment.

Perhaps a tip to prevent the queue from filling up unwantedly (either because Prometheus is not fetching the data or the collector programme has stopped) is to use CAPEXPRY if losing old monitoring data is acceptable.